How professional services delay cures for patients.

Why SDV Sucks

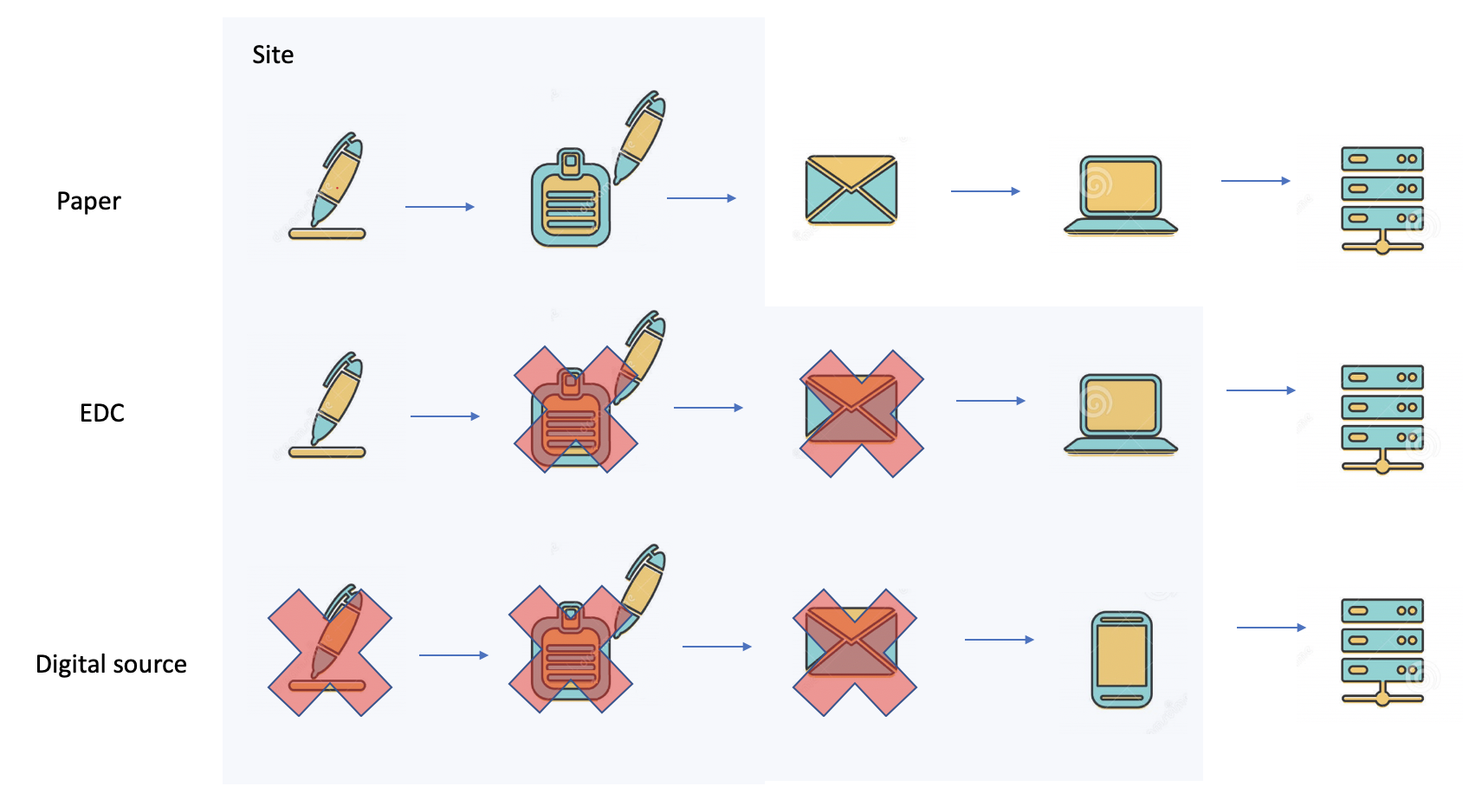

In the early 1950s, nobody knew that 70 years later clinical trials could be run from a phone. Clinical trials back then used paper forms. By the late 1970s, batch-driven data processing systems had been introduced: paper forms were transcribed onto punch cards and, later, through interactive data-entry terminals. Source document verification (SDV) was necessary to confirm that the data had been transcribed correctly from paper to punch card. By the early 1990s, electronic data capture (EDC) systems with online edit checks had arrived, validating data as it was entered. Today, online edit checks are part of a well-designed data management process that ensures clean data is entered into the EDC.

There is power in looking at situations with the mind of a nine-year-old who has no frame of reference and no notion of limits.

I’m paraphrasing Frank Slootman in his book “Tape Sucks”.

My name is Danny Lieberman. I am an outsider with the attitude of a nine-year-old: no sense of limitations and no 70-year-old frame of reference.

In this post, I do not bring the experience of a multi-decade veteran of the clinical trials industry. It was obvious to me that SDV sucks simply from looking at the history of computing.

Yes, SDV sucks. This post offers better ways to mitigate clinical trial risk without comparing pieces of paper to a computer report.

Back to the future: dateline February 2022

The UK Medicines & Healthcare products Regulatory Agency (MHRA) publishes guidance on clinical trial risk assessments, oversight and monitoring.

The MHRA guidance represents a departure from the traditional approach of aiming to assess compliance by using 100% SDV to check every data point against source documents.

With typical British understatement, the MHRA called that way of working “extremely resource intensive.” The risk-based approach is intended to protect the rights, safety and wellbeing of clinical trial participants, and the reliability of the results, without checking everything.

Basically, even the British MHRA guidance is saying that SDV sucks.

See the MHRA guidance on clinical trial risk assessment

You cannot outsource data quality and patient compliance in your clinical trial

Collecting low-quality data means that your trial is likely to fail. You will not be able to prove or disprove the scientific hypothesis of your clinical trial. You will have wasted your time.

You cannot outsource quality; you have to build it into the trial design

A clinical trial is a scientific experiment, not an outsourced payroll application

Clinical development is scientific experimentation designed to improve human health and well-being by identifying better drugs, medical devices and interventions to treat, cure or prevent illness. The scientific part of this statement refers to designing the experiment systematically, so that it can prove or disprove a scientific hypothesis about the efficacy and/or safety of the product. In many cases, one study is a stepping stone to the next. For example, a study may collect data that will be used to train the algorithm of a medical device intended to detect a particular illness faster and more accurately than conventional diagnostic procedures.

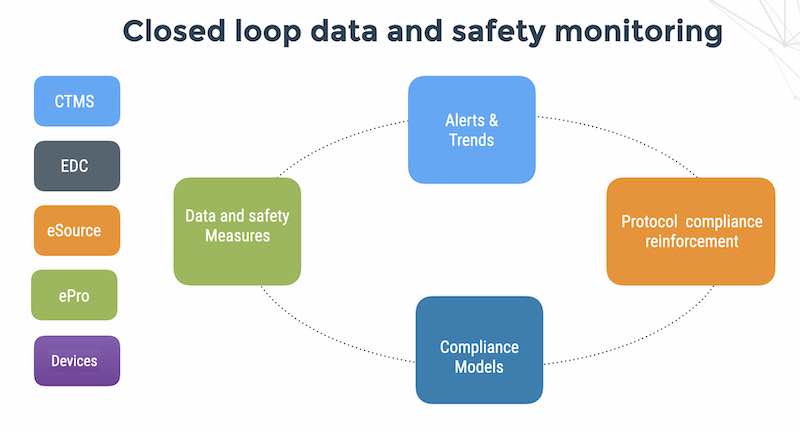

We start by understanding that SDV sucks at data modeling and monitoring.

Acquiring high-quality data in your medical device clinical trial requires three things:

1. A well-thought-out data model of your study

2. High quality data collection

3. Automated monitoring of exceptional clinical data events
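Automated monitoring of exceptional clinical data events can start as a handful of rules run over the EDC data, for example unsigned informed consents, non-compliant subjects who keep visiting the site, and visits entered before they happened. The following is a minimal sketch under stated assumptions: the `Subject` and `Visit` records, their field names and the `exceptional_events` function are hypothetical, simplified stand-ins for whatever your EDC actually exports, and the rules are illustrations rather than a complete monitoring plan.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical, simplified records; a real EDC export has its own schema.
@dataclass
class Subject:
    subject_id: str
    consent_signed: bool
    non_compliant_since: Optional[date] = None  # date the subject was flagged non-compliant

@dataclass
class Visit:
    subject_id: str
    visit_date: date  # date the visit is recorded as taking place
    entry_date: date  # date the CRC entered the visit into the EDC

def exceptional_events(subjects, visits):
    """Return (subject_id, visit_date, reason) for every visit that breaks a rule."""
    by_id = {s.subject_id: s for s in subjects}
    events = []
    for v in visits:
        s = by_id.get(v.subject_id)
        if s is None:
            events.append((v.subject_id, v.visit_date, "visit for unknown subject"))
            continue
        # Rule: no visit data without a signed informed consent.
        if not s.consent_signed:
            events.append((v.subject_id, v.visit_date, "no signed informed consent"))
        # Rule: a non-compliant subject should not keep visiting the site.
        if s.non_compliant_since is not None and v.visit_date > s.non_compliant_since:
            events.append((v.subject_id, v.visit_date, "visit after subject became non-compliant"))
        # Rule: a visit cannot be entered before it happened.
        if v.entry_date < v.visit_date:
            events.append((v.subject_id, v.visit_date, "visit entered before it happened"))
    return events

# Illustrative sample data (made up for this sketch).
subjects = [
    Subject("S01", consent_signed=True),
    Subject("S02", consent_signed=False),
    Subject("S03", consent_signed=True, non_compliant_since=date(2022, 3, 1)),
]
visits = [
    Visit("S01", date(2022, 3, 10), date(2022, 3, 11)),
    Visit("S01", date(2022, 4, 10), date(2022, 3, 20)),  # entered three weeks early
    Visit("S02", date(2022, 3, 12), date(2022, 3, 12)),
    Visit("S03", date(2022, 3, 15), date(2022, 3, 16)),
]

events = exceptional_events(subjects, visits)
for event in events:
    print(event)
```

None of these rules compares paper to a screen; each one runs on data already in the EDC, so it can be scheduled daily instead of waiting for the next monitoring visit.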

10 ways to reduce the risk of collecting low-quality data

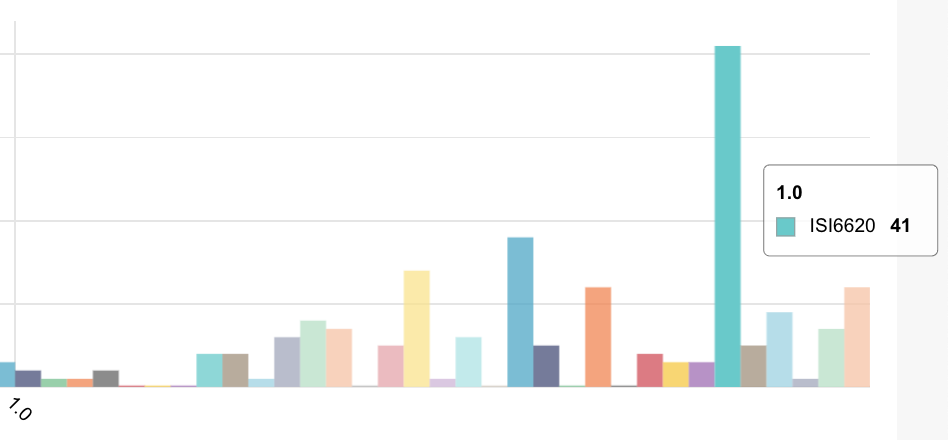

1. Measure a CRC time metric: how long it takes the site CRC (clinical research coordinator) to enter data into your EDC system after the patient visits the site. This metric should be 1-2 days. Anything longer, and your site staff are losing touch with the data.

2. Measure how many non-compliant subjects continue to visit the site. This is a blatant protocol violation. Source document verification cannot detect non-compliance.

3. Measure informed consent CRFs that were not signed. This is another blatant GCP violation, and it can call the results of your study into question.

4. Measure data entry of future events. Are sites entering visits before they actually happen? This is a sign of sloppy work at best, and it only goes downhill from there. We’ve seen sites enter early termination before the event, which can’t be good news. Source document verification cannot detect future events.

5. Measure a CRA time metric. This metric has two parts: part 1 is how often your CRO/CRA actually visits the site, as measured by actual logins to your EDC system; part 2 is how long it takes them to perform source document verification and log the results in your EDC system. As a rule of thumb, part 1 should be 14-21 days, and part 2 should be no more than 30 days, depending on the pace of the study. If this metric reaches 90 days or more, your CRO is not doing its job and you have lost touch with your data quality. What you can’t observe, you can’t measure, and no one likes being in the dark.

6. Measure a query rate metric. Too many queries is not a good sign. A large number of data validation queries is a sure indicator that you have problems with your data model. A large number of site queries closed automatically by the site monitors can be a sign of training issues and/or apathy. Source document verification is not a risk control for poor systems or under-performing sites.

7. Measure one thing that is totally out of left field: a metric that your gut tells you must mean something. For example, in a psychiatric study, if site comments recorded in EDC queries indicate that subjects are suicidal, you would not expect the study monitors to close that query with a “resolved” status.

8. Honor and suspect: this is a literal translation of the Hebrew כבדהו וחשדהו. In my experience, Americans in particular tend to be very politically correct when it comes to CRO oversight. I could write a book about this, but suffice it to say: collect your metrics and keep your CRO on its toes. As I have written elsewhere, if CROs are part of the problem, how can they be part of the solution?

9. KISS (Keep It Simple, Stupid): apply KISS to the data model design of your experiment. Resist, at all costs, the temptation to collect lots of extra data points just because you can. Remember, the site CRC is your best friend, and more data means less attention to quality. When the data model is overly complex, for example with too many parameters relative to the number of data points collected, over-fitting occurs: your model can describe anything, including the noisy data your sites collected. The same goes for data validation edit checks. Too many edit checks can keep sites from entering data on time, and on-time data entry is your number 1 quality metric. KISS is one more reason that SDV sucks.

10. Use FlaskData and get all these things for free. FlaskData is a secure, fully managed Digital CRO service for collecting, storing, monitoring, searching and analyzing clinical data in the cloud, for life science companies that need the highest level of support and commitment to their study success.
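The time and query metrics in items 1, 5 and 6 come down to simple date arithmetic over an EDC export. The following is a minimal sketch, not FlaskData's implementation: it assumes hypothetical inputs (pairs of visit and entry dates, a list of CRA login dates, pairs of visit and SDV dates, and raw query and data-point counts), and the function names are made up for illustration. The thresholds are the rules of thumb from the list; the post gives no threshold for the query rate, so the sketch only normalizes it per 100 data points.

```python
from datetime import date
from statistics import median

# Thresholds taken from the rules of thumb above.
CRC_MAX_DAYS = 2        # item 1: visit -> EDC data entry
CRA_MAX_GAP_DAYS = 21   # item 5, part 1: days between CRA logins
SDV_MAX_DAYS = 30       # item 5, part 2: visit -> SDV logged in the EDC

def lag_days(pairs):
    """Days elapsed for each (start, end) date pair."""
    return [(end - start).days for start, end in pairs]

def gaps_days(dates):
    """Days between consecutive dates, after sorting them."""
    ordered = sorted(dates)
    return [(b - a).days for a, b in zip(ordered, ordered[1:])]

def query_rate(n_queries, n_data_points):
    """Queries raised per 100 data points entered (item 6)."""
    return 100.0 * n_queries / n_data_points if n_data_points else 0.0

def site_report(entries, cra_logins, sdv, n_queries, n_data_points):
    """Summarize one site's CRC, CRA and query metrics (hypothetical input format)."""
    crc = lag_days(entries)
    gaps = gaps_days(cra_logins)
    sdv_lags = lag_days(sdv)
    return {
        "crc_median_days": median(crc) if crc else None,
        "crc_late_entries": sum(d > CRC_MAX_DAYS for d in crc),
        "cra_max_gap_days": max(gaps, default=None),
        "cra_gap_exceeded": any(g > CRA_MAX_GAP_DAYS for g in gaps),
        "sdv_max_days": max(sdv_lags, default=None),
        "sdv_late": sum(d > SDV_MAX_DAYS for d in sdv_lags),
        "queries_per_100_points": query_rate(n_queries, n_data_points),
    }

# Illustrative sample data for one site (made up for this sketch).
entries = [
    (date(2022, 3, 1), date(2022, 3, 2)),
    (date(2022, 3, 8), date(2022, 3, 15)),   # entered a week late
    (date(2022, 3, 15), date(2022, 3, 16)),
]
cra_logins = [date(2022, 3, 1), date(2022, 3, 18), date(2022, 5, 2)]
sdv = [
    (date(2022, 3, 1), date(2022, 3, 18)),
    (date(2022, 3, 8), date(2022, 5, 2)),
]

report = site_report(entries, cra_logins, sdv, n_queries=12, n_data_points=400)
print(report)
```

Using the median for CRC lag keeps one badly late entry from hiding an otherwise healthy site, while the late-entry count still surfaces it.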